#20 Running LLMs on Your Local Machine...

The Beginner's Guide to Local LLMs: Jan AI, LM Studio, and More.

Hello! This week is all about local LLMs, because I want you all to try running an LLM on your own machine. If you haven’t already, today I am going to share the ways I tried, what it feels like, and how it differs from online LLMs.

Yes, I am talking about Large Language Models.

And LLM does not only mean OpenAI’s ChatGPT or Google’s Gemini. There are a number of open-source models that outperform these in certain tasks.

I strongly believe LLMs are soon going to become just like our browser extensions: something we can plug in and start using for each of our daily tasks.

Why should you care about local LLMs?

Data Privacy and Security: whatever you chat or prompt stays entirely on your own machine, so no one is collecting your prompts or training a model on them.

Offline Availability: you don’t need internet once you have downloaded an LLM to your machine, and it can complete any task that doesn’t need the web. (Many tasks can be completed without internet, think about it!)

Lower Latency: responses can start faster because the model runs in your home, on your computer, so there is no network round trip to a cloud server sitting in some nearby region. How many tokens per second you actually get depends on your hardware and the size of the model.

The best part is open-source models, which truly enable us to use most LLM capabilities on a local machine, with no need to pay for cloud compute to run these models.

Every day and every week new models pop up, unlocking new parameter ranges and trained on trillions of tokens of data.

And no! We are not training or fine-tuning any model today. We are just chatting with an LLM that will be running on your machine.

Let’s suit up!

So, there are 3 best ways we could do this:

Terminal (CMD)

Jan AI

LM Studio

While the terminal option using commands is more fun, I am saving it for another day. Today I want to share two of the coolest products I have used recently.

Let’s begin with Jan AI

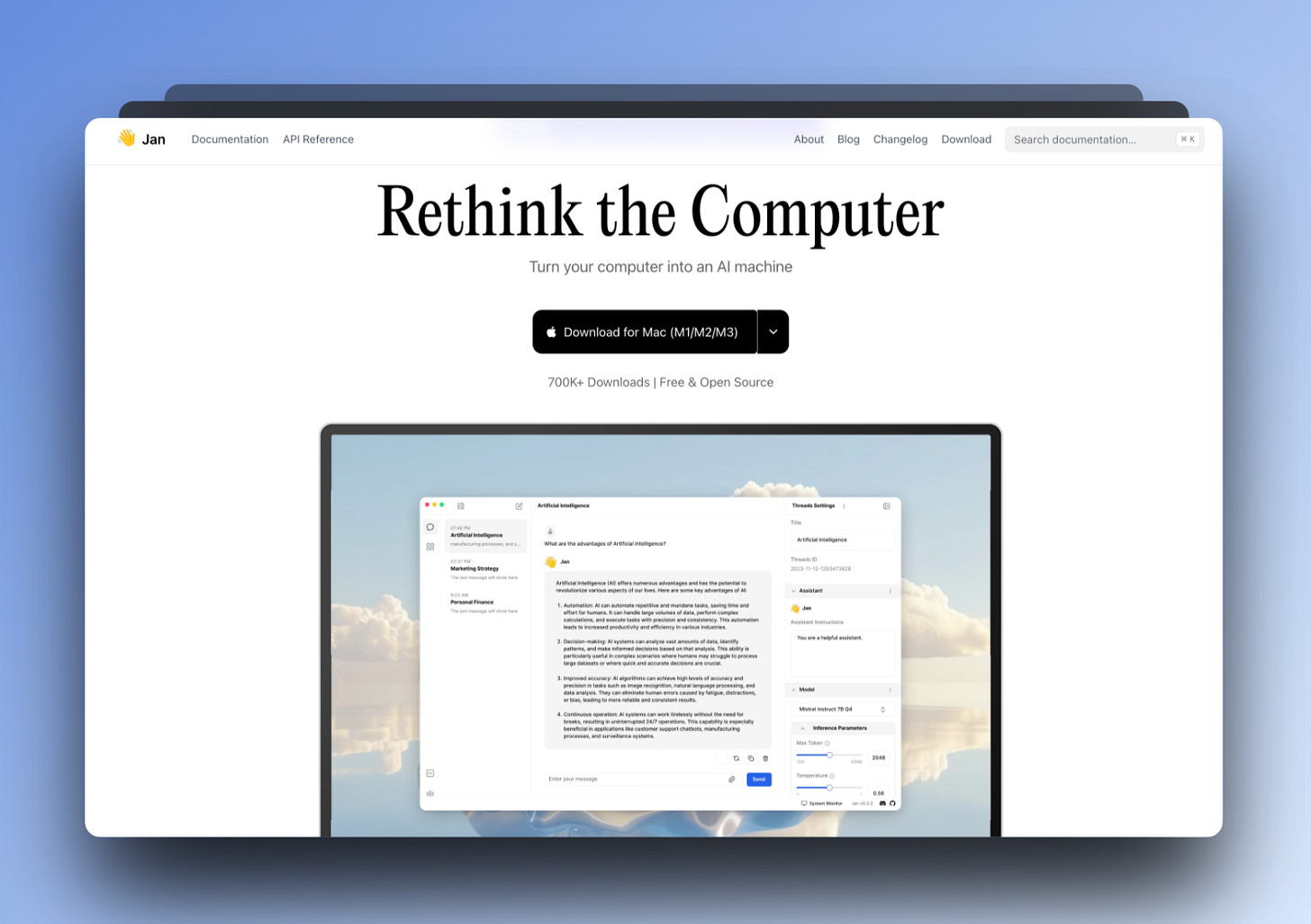

“Jan AI is a platform that enables you to run self-hosted local AI”

You can also connect to OpenAI from your local machine using their API key through Jan, but our primary focus here is to install an LLM on your system and chat with it.

Download Jan AI here: https://jan.ai/download

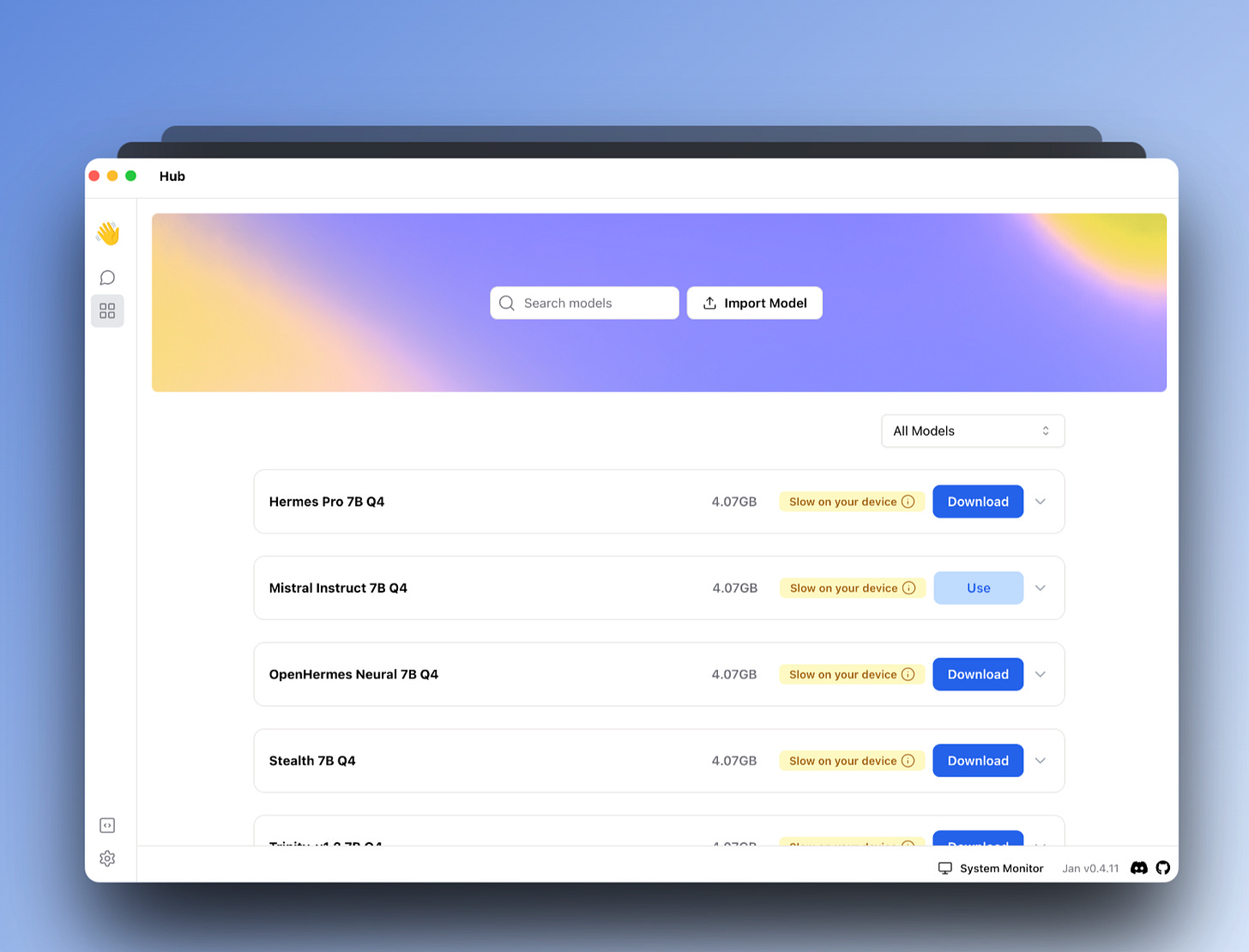

Select and download a Model (I tried with mistral-ins-7b-q4)

Jan AI can identify your system resources and recommend which models will run best on your machine, because each model has a different number of parameters and a different size. Based on your machine’s RAM, storage, and CPU resources, you can choose which one to install.
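To get a feel for why parameter count and quantization decide what fits on your machine, here is a rough back-of-the-envelope sketch. The function name and the 4, 8, and 16 bits-per-weight values are my own illustrative assumptions, not something Jan AI exposes. Real model files are usually a bit larger than this estimate, and running a model needs extra memory for the conversation context on top of the weights.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes).

    Hypothetical helper for illustration: it ignores file metadata and the
    extra memory a runtime needs for context while chatting.
    """
    # params (in billions) * bits per weight / 8 bits per byte = gigabytes
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    # A 7B model at a few common precisions (illustrative values).
    for bits in (4, 8, 16):
        size = estimate_model_size_gb(7, bits)
        print(f"7B model at {bits}-bit: about {size:.1f} GB")
```

By this estimate a 7B model at 4-bit needs about 3.5 GB for its weights, while the same model at 16-bit needs about 14 GB, which is why the quantized (q4) builds are the ones that run comfortably on an ordinary laptop.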

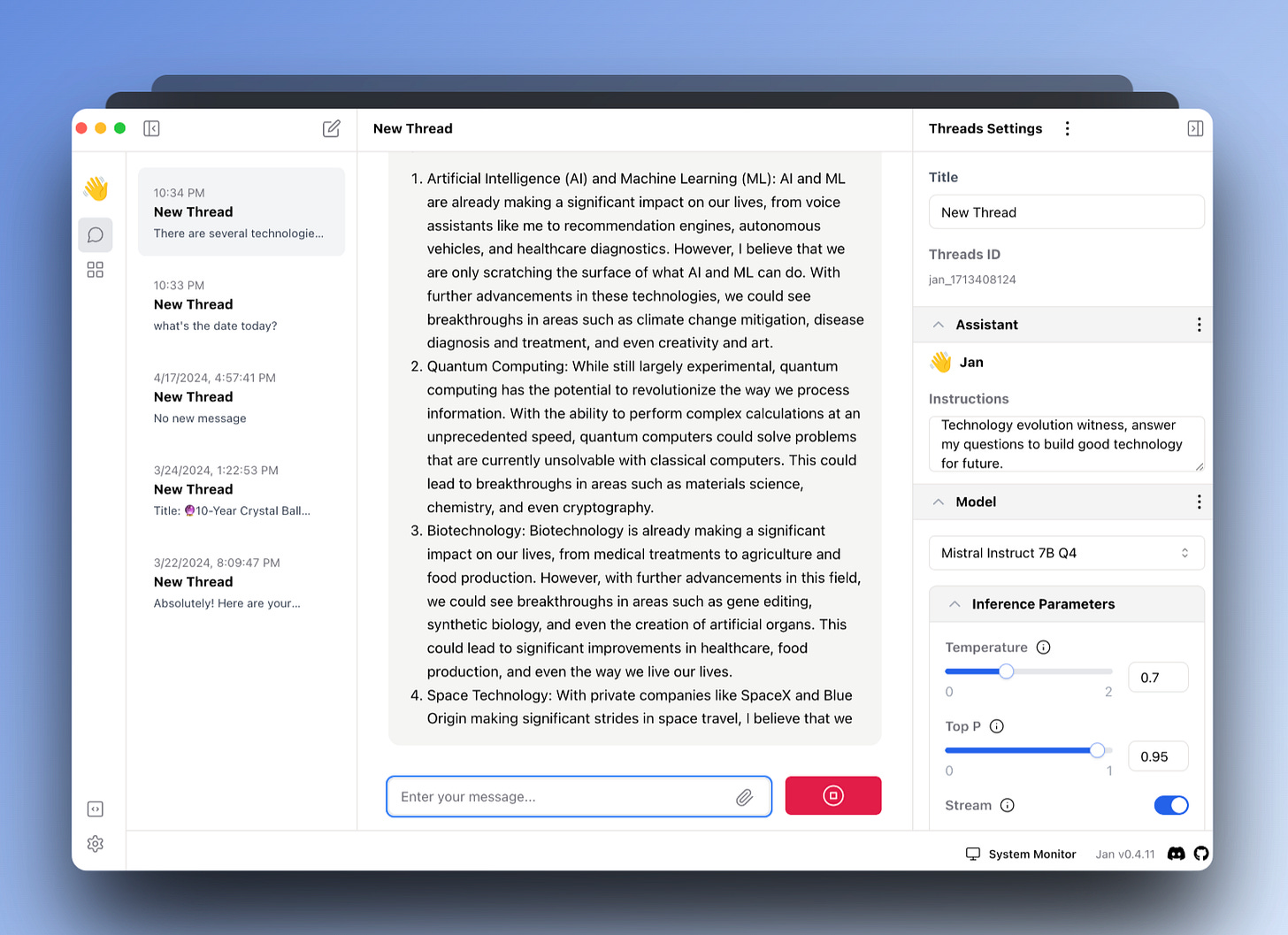

Click on new thread to chat with this model

Jan AI can do more than run LLMs on your local machine, and they also have an amazing community on Discord. If you are interested, learn more about them here.

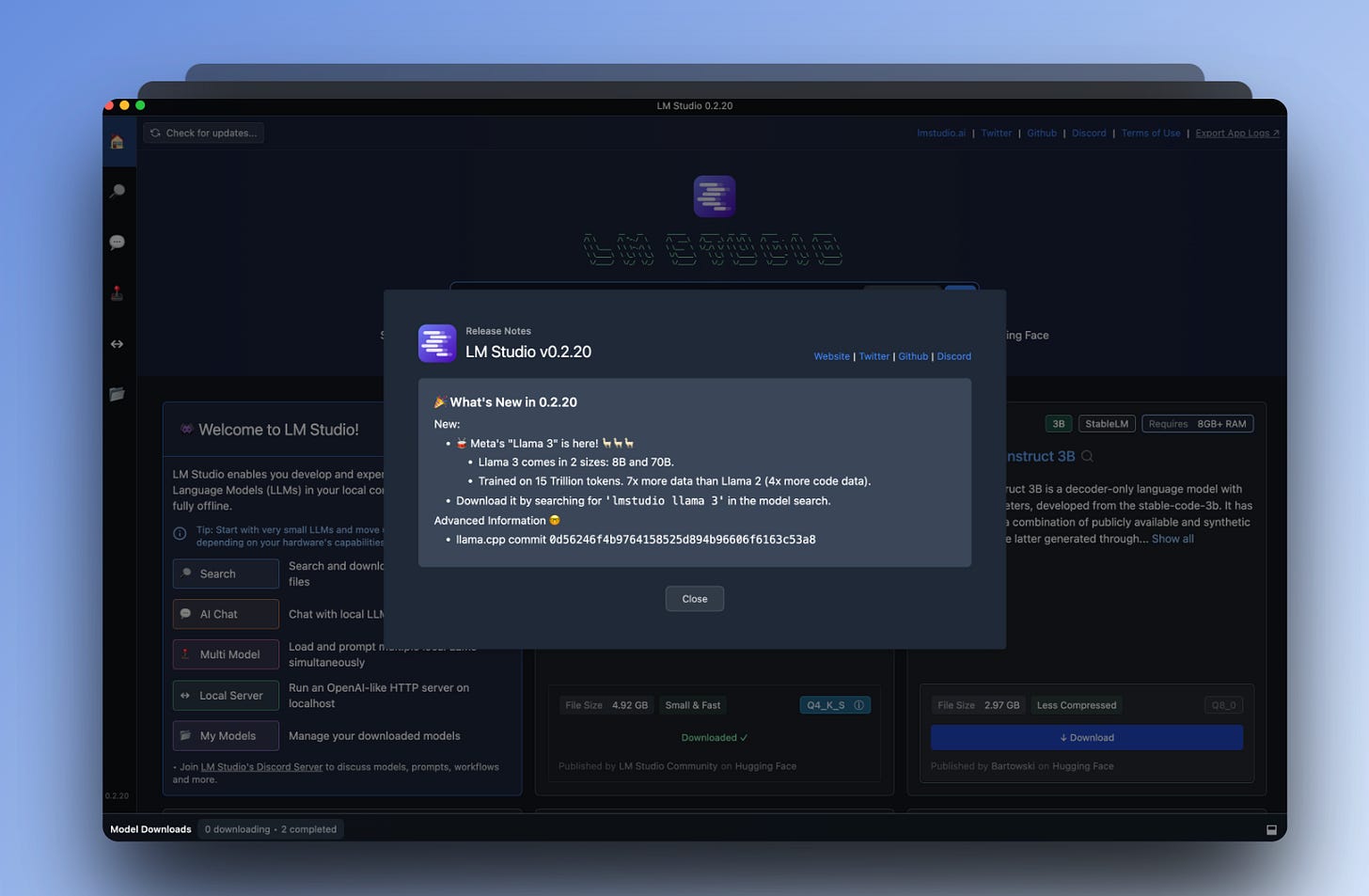

The 2nd option is LM Studio. Similar to Jan AI, LM Studio has gained a lot of traction recently among the AI and developer communities.

Here too, you can install an LLM that fits the resources available on your machine and start using it.

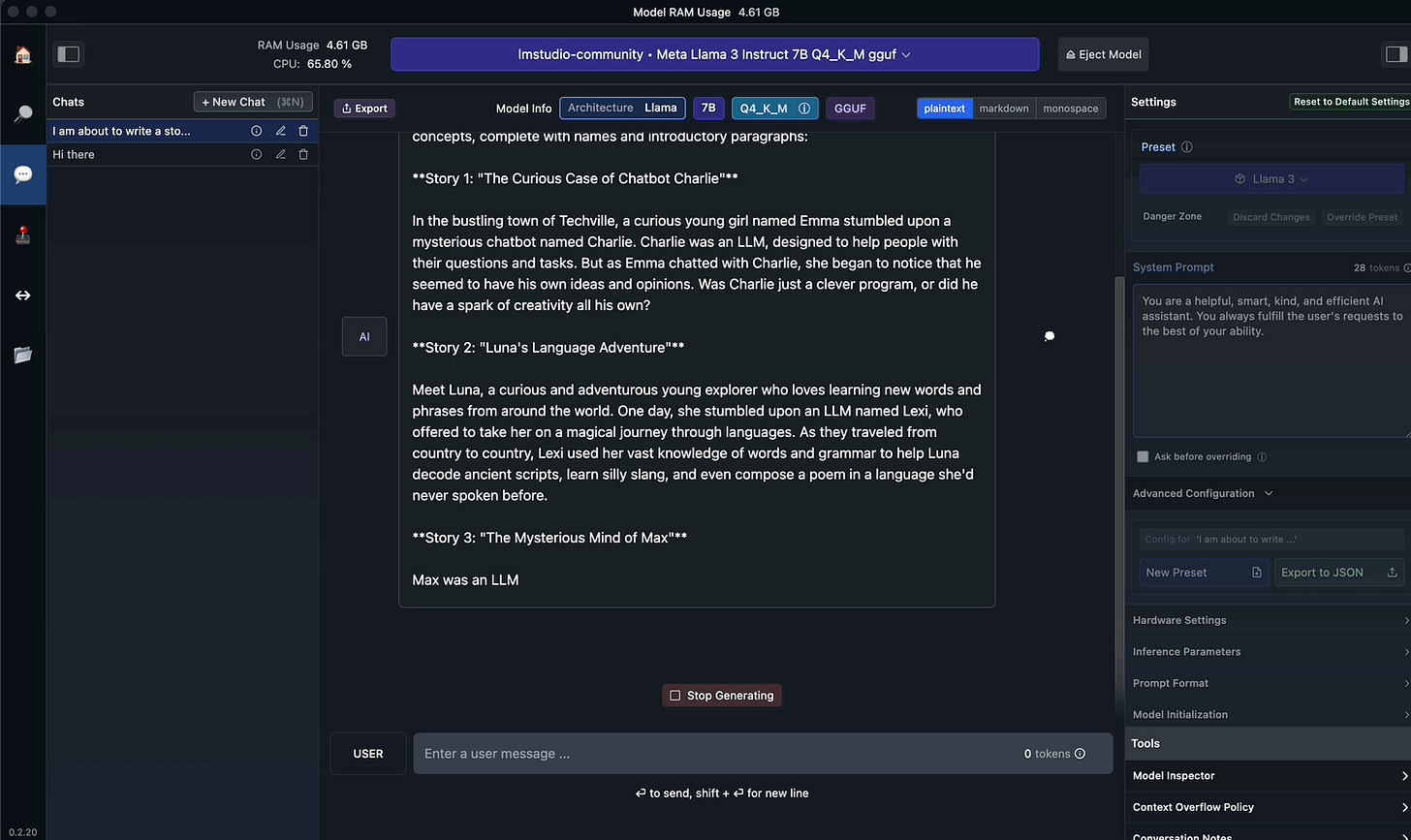

3️⃣ I upgraded my LM Studio yesterday to support Meta’s Llama 3 🦙

Llama 3 comes in 2 variants, 8 billion (8B) and 70 billion (70B) parameters, so I tried Meta-Llama-3-8B-Instruct, which is 4.92 GB in size.

Fun Fact: Meta Llama 3 is called an open-weight LLM (not open-source).

I asked Llama 3 to come up with a storybook for kids that teaches them about LLMs as a sleep story, and here is the result:

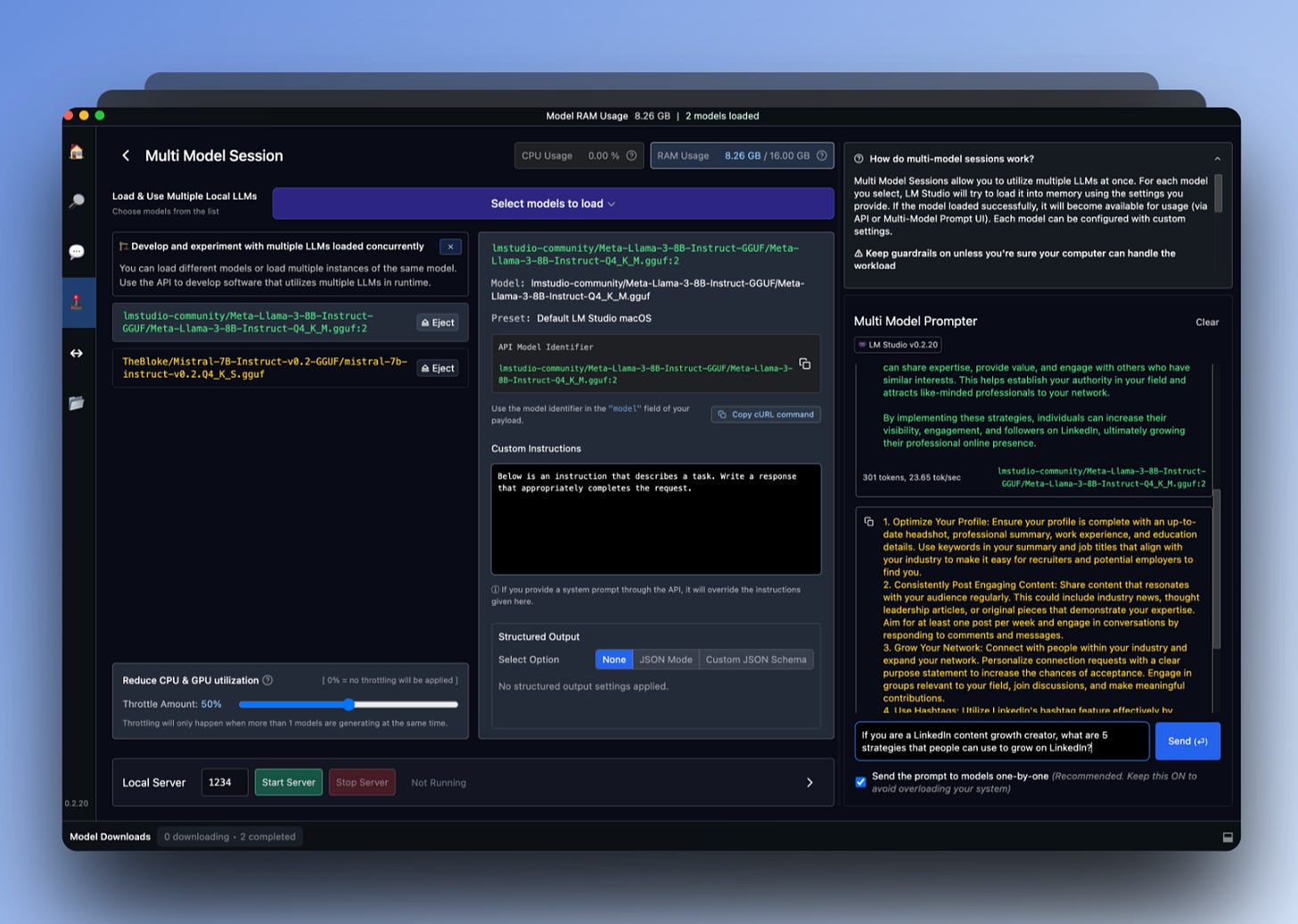

2️⃣ The best part about LM Studio is that you can run and compare multiple LLMs at the same time on your machine (please make sure you have sufficient resources to run them like this; LM Studio shows how much RAM each model is consuming).
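Before loading two models side by side, a quick sanity check like the one below can save you from a frozen laptop. This is only a sketch under my own assumptions: the function name, the 4 GB reserved for the operating system, and the 4.4 GB figure for the second model are hypothetical. Only the 4.92 GB for Meta-Llama-3-8B-Instruct comes from my download. A model's file size is a lower bound on the RAM it uses once loaded.

```python
def models_fit_in_ram(model_sizes_gb, total_ram_gb, reserved_gb=4.0):
    """Return True if all models together fit in the RAM left for them.

    reserved_gb is an assumed allowance for the OS and other apps,
    not a value reported by LM Studio.
    """
    needed = sum(model_sizes_gb)
    available = total_ram_gb - reserved_gb
    return needed <= available


if __name__ == "__main__":
    llama3_8b_gb = 4.92   # size of Meta-Llama-3-8B-Instruct from this post
    second_model_gb = 4.4  # hypothetical size for a second 7B model

    for ram in (8, 16, 32):
        ok = models_fit_in_ram([llama3_8b_gb, second_model_gb], ram)
        print(f"{ram} GB RAM: {'should fit' if ok else 'too tight'}")
```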

Green color is for Meta-Llama-3-8B and Yellow is for Mistral-7B-Instruct

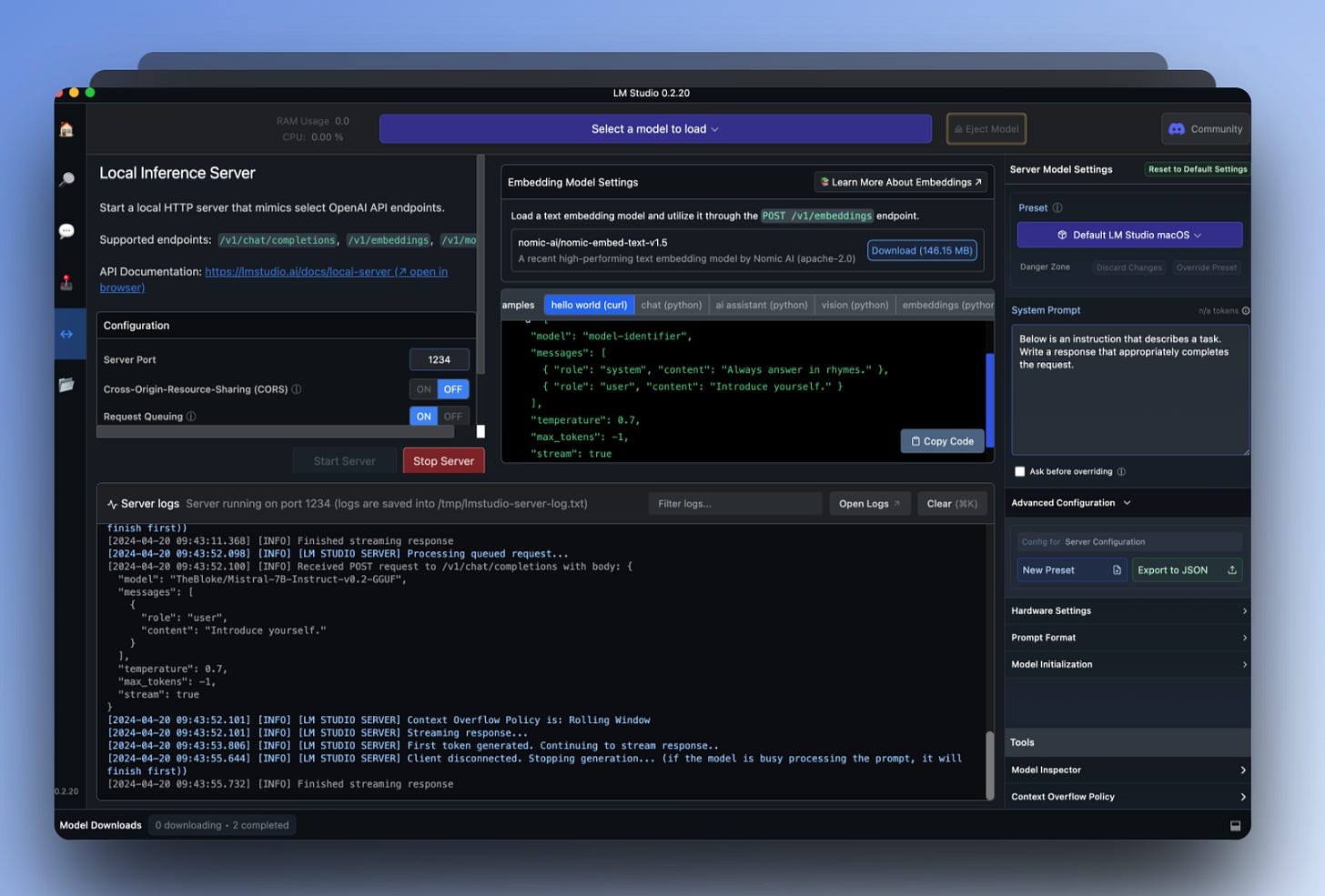

1️⃣ And finally, you can also create an instant API server to build APIs on top of your local LLMs.

So I gave it a try: LM Studio itself becomes the host of this API, and we can send requests to it.
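Here is a minimal sketch of what calling that local server can look like from Python, using only the standard library. I am assuming the server is running on LM Studio's default address (`http://localhost:1234/v1`) and that it accepts OpenAI-style chat completion requests. The `"local-model"` name, the system prompt, and the helper function names are placeholders of mine, so check the server tab in your LM Studio for the exact address and model name.

```python
import json
import urllib.request

# Assumption: LM Studio's local server on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(prompt, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat completion request body.

    "local-model" is a placeholder name, not a real model identifier.
    """
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": temperature,
    }


def chat(prompt, base_url=BASE_URL, timeout=120):
    """Send the prompt to the local server and return the reply text."""
    data = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With the server started in LM Studio and a model loaded, `print(chat("Tell me a two-line bedtime story about LLMs."))` should print the model's reply. Nothing leaves your machine, because the request only goes to localhost.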

I genuinely felt that running LLMs on my own machine is fun and safe, as long as I don’t need anything from the internet. For that, there are obviously other tools like Perplexity, and even ChatGPT is not connected to the internet today.

Conclusion

LLMs are part of the AI evolution, and I believe AI is going to be integrated into our devices’ operating systems (Windows, Android, iOS, macOS, visionOS).

Of course, AI is already deeply rooted in many products we use daily in the form of prediction, analytics, recommendation systems, etc., but I am excited about the evolution of LLMs because of the forms they come in:

Text-to-text LLM

Text-to-Image LLM

Multimodal Large Language Models (MLLMs)

Anyone who knows the language (English) can build products with AI.

The internet saved a lot of time when it all started and, at the same time, it shaped new ways of building technology and consuming it.

This cycle is going to repeat with LLMs in AI.

If you want to learn how exactly Gen AI works, since it all started with Transformers, here is the best explanation:

Gen AI Explained With Visual Storytelling

This local LLM adventure has just begun!

Let me know your thoughts in the comments below. Have you tried local LLMs? What are your experiences?

Share this post with your friends and network communities interested in AI and experimentation.

Get Your Hands Dirty - Dive into the world of local LLMs! Explore the tools and resources I shared above, and let me know what you think!

Thank you once again for reading my newsletter. Your support means a lot to me!