Meta has released its Llama 4 family of open-weight models: Scout, Maverick, and the still-in-training Behemoth. Let's break down what each model offers and what it could mean for the future of AI.

Llama 4 Scout (109B)

Llama 4 Scout has 17 billion active parameters across 16 experts, for 109 billion parameters in total. Its standout feature is a 10 million token context window, far beyond the 128K tokens supported by Llama 3.1.

What this means for AI's future:

Document processing revolution: Organizations can fit very large document collections into a single prompt, enabling analysis that spans all of them at once.

Code understanding at scale: Developers can input entire codebases for analysis, debugging, and optimization.

Research synthesis: Scientists can analyze vast collections of research papers to identify patterns and connections that might otherwise be missed.

A 10 million token window is a large jump in how much input a model can accept at once. If coherence and understanding hold up across inputs that long, it could transform how we interact with large bodies of information.
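To get a feel for what these context sizes mean in practice, here is a rough budgeting sketch. It assumes about 4 characters per token, which is a common rule of thumb for English text and not a figure from the Llama 4 tokenizer; the corpus sizes are hypothetical.

```python
import math

# Assumption: ~4 characters per token, a rough heuristic for English text.
# A real estimate should use the model's own tokenizer.
CHARS_PER_TOKEN = 4.0

SCOUT_CONTEXT = 10_000_000     # tokens, as stated for Llama 4 Scout
MAVERICK_CONTEXT = 1_000_000   # tokens, as stated for Llama 4 Maverick


def estimate_tokens(num_chars, chars_per_token=CHARS_PER_TOKEN):
    """Estimate the token count of a document from its character count."""
    return math.ceil(num_chars / chars_per_token)


def fits_in_context(doc_char_counts, context_tokens, reserve_tokens=0):
    """Return (fits, total_tokens) for a list of document sizes in characters.

    reserve_tokens leaves room for the instructions and the model's answer.
    """
    total = sum(estimate_tokens(n) for n in doc_char_counts)
    return total + reserve_tokens <= context_tokens, total


# Hypothetical corpus: 50 documents of 400,000 characters each.
corpus = [400_000] * 50

fits_scout, total_tokens = fits_in_context(corpus, SCOUT_CONTEXT)
fits_maverick, _ = fits_in_context(corpus, MAVERICK_CONTEXT)

print(f"Estimated tokens: {total_tokens:,}")
print(f"Fits in Scout's window: {fits_scout}")
print(f"Fits in Maverick's window: {fits_maverick}")
```

Under these assumptions the corpus comes to about 5 million tokens, so it fits in Scout's window but would need to be split or filtered for Maverick's.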

Llama 4 Maverick (400B)

Maverick has 17 billion active parameters across 128 experts, for approximately 400 billion parameters in total. It offers a 1 million token context window and, like the rest of the Llama 4 family, is natively multimodal.

What this means for AI's future:

Multimodal reasoning: The ability to seamlessly understand both text and images opens possibilities for more natural human-AI interaction.

Visual problem-solving: From medical diagnosis to architectural design, Maverick can analyze visual data alongside textual information.

Creative applications: Content creators can describe concepts textually while providing visual references, receiving cohesive outputs that integrate both.

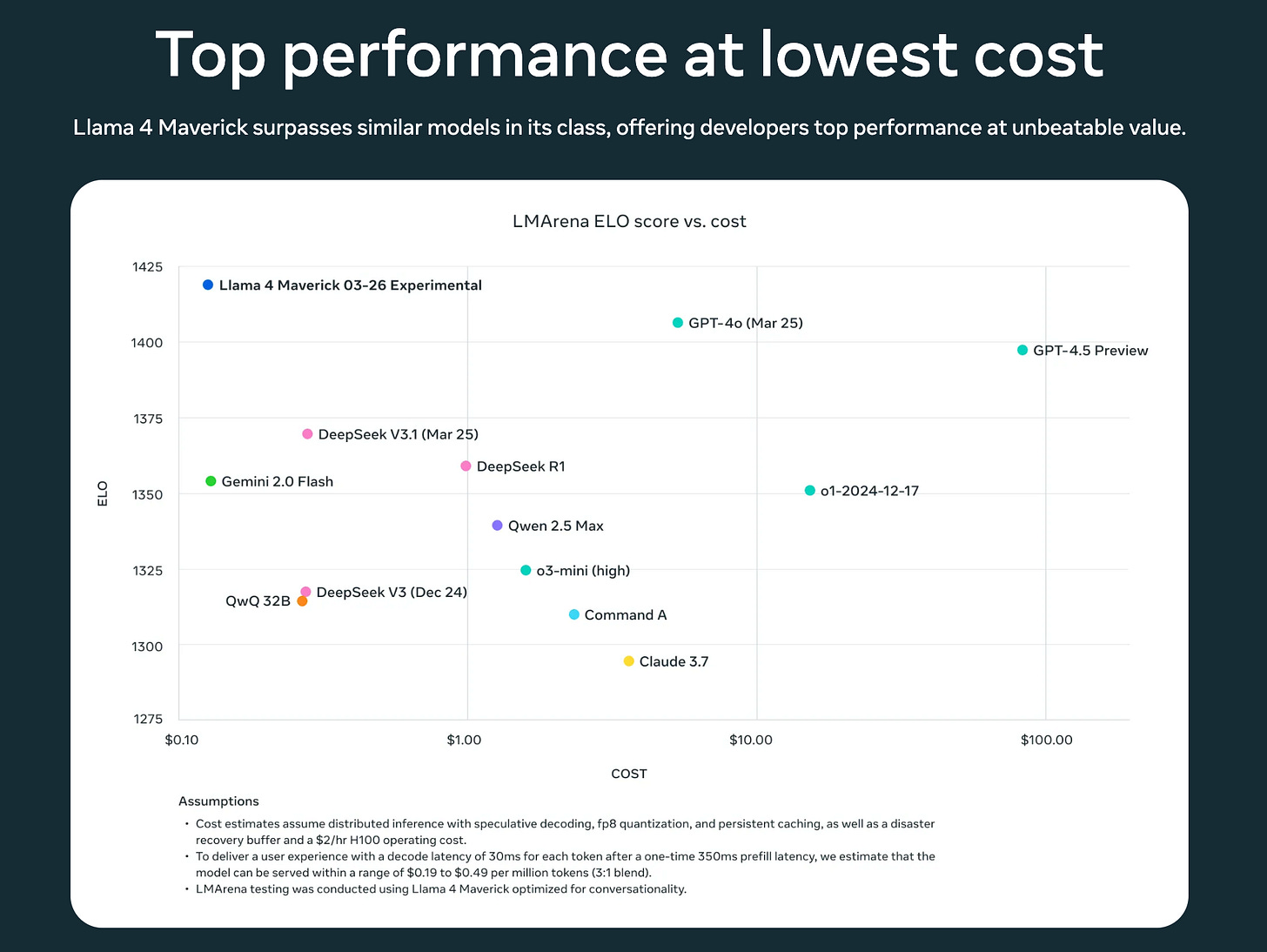

The combination of multimodality and a large total parameter count, with only 17 billion parameters active per token, positions Maverick as a serious competitor to models like GPT-4o and Gemini 2.0 Flash.

Llama 4 Behemoth (2T)

Still in training, Behemoth has 288 billion active parameters across 16 experts, for nearly 2 trillion parameters in total. According to Meta's own early results, it outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks.

What this means for AI's future:

New performance ceiling: Behemoth could redefine what's possible in AI reasoning, creativity, and problem-solving.

Enterprise transformation: Large organizations could deploy customized versions for industry-specific applications that require deep expertise.

Scientific breakthrough potential: The model's reasoning capabilities could help tackle complex scientific challenges by identifying non-obvious connections.

The Mixture-of-Experts Architecture

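All Llama 4 models use a mixture-of-experts (MoE) architecture: a router sends each token to a small subset of expert sub-networks, so only a fraction of the parameters is active for any given token. This makes them more compute-efficient per token, in both training and inference, than a dense model of the same total size, although the full set of parameters still has to be held in memory.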
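The routing idea can be illustrated with a toy sketch. This is not Meta's implementation: the experts here are simple scaling functions, the router logits are hard-coded rather than produced by a learned layer, and `top_k` is a hypothetical parameter chosen for illustration.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k=1):
    """Pick the top_k experts for one token.

    Returns (expert_index, weight) pairs, with weights renormalized
    so that they sum to 1 over the chosen experts.
    """
    probs = softmax(router_logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, experts, router_logits, top_k=1):
    """Run only the selected experts and combine their outputs by weight."""
    out = [0.0] * len(token)
    chosen = route_token(router_logits, top_k)
    for idx, weight in chosen:
        expert_out = experts[idx](token)  # every other expert stays idle
        out = [o + weight * v for o, v in zip(out, expert_out)]
    return out, [idx for idx, _ in chosen]


# 16 toy experts (the expert count stated for Scout); expert i scales by i + 1.
experts = [lambda x, s=s: [s * v for v in x] for s in range(1, 17)]

# Hard-coded logits standing in for a learned router; expert 3 scores highest.
logits = [0.0] * 16
logits[3] = 2.0

output, used = moe_layer([1.0, 2.0], experts, logits, top_k=1)
print(f"Experts used: {used}, output: {output}")
```

Only one of the 16 expert functions is called for this token, which is the source of the per-token compute savings: the cost scales with the active experts, not with the total.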

What this means for AI's future:

Democratization of advanced AI: More efficient models mean lower operating costs, making advanced AI more accessible.

Environmental impact reduction: Less compute-intensive models reduce energy consumption and carbon footprint.

Specialized reasoning: Different expert modules can specialize in different types of reasoning, potentially leading to more nuanced understanding.
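The efficiency argument rests on the ratio of active to total parameters. A quick calculation with the figures quoted in this post makes that concrete; the 2,000 billion total for Behemoth is a rounding of "nearly 2 trillion", so its ratio is approximate.

```python
# Parameter counts in billions, taken from the figures quoted in this post.
# Behemoth's total is rounded from "nearly 2 trillion" (an approximation).
MODELS = {
    "Scout": (17, 109),
    "Maverick": (17, 400),
    "Behemoth": (288, 2000),
}


def active_fraction(active_b, total_b):
    """Fraction of the model's parameters used for each token."""
    return active_b / total_b


for name, (active_b, total_b) in MODELS.items():
    frac = active_fraction(active_b, total_b)
    print(f"{name}: {active_b}B of {total_b}B parameters active ({frac:.1%})")
```

Scout activates roughly 16% of its parameters per token, Maverick roughly 4%, and Behemoth roughly 14%. Maverick's low ratio comes from spreading its capacity over 128 experts while keeping the same 17 billion active parameters as Scout.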

Native Multimodality

The Llama 4 models incorporate early fusion to seamlessly integrate text and vision tokens, enabling joint pre-training with unlabeled text, image, and video data.
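As a rough illustration of what early fusion means, the sketch below maps text tokens and image patches into the same vector space and joins them into one sequence before any model layer runs. Everything here is a simplifying assumption for illustration: the tiny dimensions, the embedding table, the projection matrix, and the image-before-text ordering are not taken from Llama 4.

```python
D_MODEL = 4      # hypothetical embedding width
PATCH_SIZE = 3   # hypothetical number of pixel values per flattened patch


def embed_text(token_ids, table):
    """Look up one D_MODEL-sized vector per text token."""
    return [table[t] for t in token_ids]


def embed_patches(patches, proj):
    """Project each flattened image patch to a D_MODEL-sized vector.

    proj has D_MODEL rows, each of length PATCH_SIZE (a plain linear map).
    """
    return [
        [sum(w * p for w, p in zip(row, patch)) for row in proj]
        for patch in patches
    ]


def early_fusion(image_vecs, text_vecs):
    """Join both modalities into one sequence for a shared backbone."""
    return [("image", v) for v in image_vecs] + [("text", v) for v in text_vecs]


# Toy embedding table for a 10-token vocabulary.
table = [[float(i)] * D_MODEL for i in range(10)]
# Toy projection matrix: every output dimension sums the patch values.
proj = [[1.0] * PATCH_SIZE for _ in range(D_MODEL)]

text_vecs = embed_text([2, 5, 7], table)
image_vecs = embed_patches([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]], proj)

sequence = early_fusion(image_vecs, text_vecs)
print(f"Fused sequence length: {len(sequence)}")
print(f"Modalities in order: {[kind for kind, _ in sequence]}")
```

The point of the design is that the same layers see text and image tokens side by side from the first layer onward, as opposed to a late-fusion setup where a separate vision model's output is attached to a text model afterwards.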

What this means for AI's future:

More natural interaction: AI systems can process multiple types of information simultaneously, which is closer to how humans take in the world.

Reduced hallucinations: Visual grounding can help reduce hallucinations by anchoring language generation to visual reality.

New application domains: From augmented reality to autonomous systems, multimodal AI opens possibilities for applications that weren't feasible before.

The release of these models marks a significant milestone in AI development, with Meta clearly positioning itself at the forefront of open-weight model development.

The competition is intensifying, with DeepSeek R2 reportedly expected to launch soon.

For developers and AI enthusiasts, these advancements represent not just incremental improvements but transformative capabilities that will likely open entirely new categories of applications and use cases.

The future of AI is multimodal, context-aware, and increasingly accessible.